Want to Run LLMs Locally? Read This for an Easy, Cost-Effective Solution

Running a Large Language Model (LLM) on your local machine has traditionally required advanced technical expertise in LLMs, high-performance and costly GPUs, and a strong understanding of computer architecture.

But this process has been simplified thanks to Ollama, an open-source tool that makes deploying open models such as Meta's Llama locally more accessible. With Ollama, you don't need expensive hardware or cloud-based services. Developers of all levels can now run LLMs on their own machines without breaking the bank or grappling with complex and costly hardware.

In this blog, I will walk you through how easy it is to get started with Ollama and use it for text-based and multimodal applications.

Getting Started

First, download Ollama: go to ollama.com and hit the download button. It supports three operating systems (macOS, Linux, and Windows).

Choose the installer that matches your operating system. On Linux, run this command instead:

curl -fsSL https://ollama.com/install.sh | sh

How to Run Ollama?

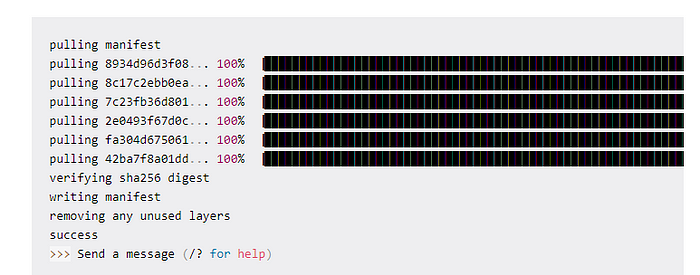

Once Ollama is installed, open your terminal and run the command below. It is the same on all platforms.

ollama run llama3

Once the command finishes, you can interact with Llama on your local system: it will ask you to send a message or a prompt.

To exit the program, type /exit.

With Ollama you can also use multimodal models like LLaVA. A multimodal model accepts images as well as text as input and generates a response based on both.

How to Run Multimodal LLMs?

First, download and start the LLaVA model using the command:

ollama run llava

I gave LLaVA an image and asked it to describe what's in the picture. This is the response:

The image shows a person walking across a crosswalk at an intersection. There are traffic lights visible, and the street has a bus parked on the side. The road is marked with lane markings and a pedestrian crossing signal. The area appears to be urban and there are no visible buildings or structures in the immediate vicinity of the person.
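The same kind of question can also be asked from a script. Ollama serves a local HTTP API, and its generate endpoint accepts base64-encoded images alongside the prompt. The sketch below uses only the Python standard library and assumes the server is listening on its default address, localhost:11434; the file name street.jpg and the helper names are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your setup differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_image_payload(image_bytes, prompt, model="llava"):
    """Return the JSON body for an image prompt; images travel as base64 text."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"model": model, "prompt": prompt, "images": [encoded], "stream": False}


def describe_image(path, prompt="What is in this picture?"):
    """Read an image from disk, send it to the local server, return the reply text."""
    with open(path, "rb") as image_file:
        payload = build_image_payload(image_file.read(), prompt)
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]


if __name__ == "__main__":
    try:
        # "street.jpg" is a hypothetical file name; point it at your own image.
        print(describe_image("street.jpg"))
    except OSError as error:
        print(f"Could not read the image or reach Ollama: {error}")
```

Setting "stream" to False asks the server for one complete JSON reply instead of a stream of partial ones, which keeps the parsing to a single line.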

How to Use Ollama with Python?

If you want to use LLMs in your applications, you can run Ollama as a server on your machine and send it cURL requests.

But there are simpler ways. If you like using Python and want to build LLM apps, here are two ways to do it:
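As a rough sketch of what such a request looks like, here is the equivalent of a cURL call written with Python's standard library. It assumes the Ollama server is running on its default address, localhost:11434, and that the llama3 model has already been pulled; the function names are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your setup differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build the HTTP request for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("Why is the sky blue?"))
    except OSError as error:
        # Raised when nothing is listening on the assumed address.
        print(f"Could not reach Ollama: {error}")
```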

- Using the official Ollama Python library

- Using Ollama with LangChain

To use the Ollama Python library you can install it using pip like so:

$ pip install ollama

There is an official JavaScript library too, which you can use if you prefer developing with JS.

Once you install the Ollama Python library, you can import it into your Python application and work with large language models. Here’s the snippet for a simple language generation task:

import ollama

response = ollama.generate(model='gemma:2b', prompt='what is a qubit?')
print(response['response'])

Using LangChain

Another way to use Ollama with Python is using LangChain. If you have existing projects using LangChain it’s easy to integrate or switch to Ollama.

If you have never heard of LangChain, its official documentation is a good place to learn more about it.

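Make sure you have LangChain installed, along with the langchain-community package that provides the Ollama integration used below. If not, install them using pip:

$ pip install langchain langchain-community

Here's an example:

from langchain_community.llms import Ollama

llm = Ollama(model="llama2")
# invoke() returns the generated text as a string
print(llm.invoke("tell me about partial functions in python"))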

Using LLMs like this in Python apps makes it easier to switch between different LLMs depending on the application.
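One way to picture that flexibility is a small lookup that picks a model per task. This is only an illustrative sketch: the task names, the mapping, and the helper functions are hypothetical, and the model names are simply the ones used in this post. The `generate` argument is any callable that takes a model name and a prompt, so you can plug in the Ollama library, LangChain, or a stub for testing.

```python
# Hypothetical mapping from a task label to a model name.
MODEL_FOR_TASK = {
    "chat": "llama3",
    "vision": "llava",
    "quick": "gemma:2b",
}


def pick_model(task, default="llama3"):
    """Return the model configured for a task, or the default if none is set."""
    return MODEL_FOR_TASK.get(task, default)


def ask(prompt, task, generate):
    """Run the prompt through whichever model is mapped to the task.

    `generate` is any callable of the form generate(model, prompt) -> str.
    """
    return generate(pick_model(task), prompt)


# Example wiring with the Ollama Python library (requires `pip install ollama`):
#
#   import ollama
#   def ollama_generate(model, prompt):
#       return ollama.generate(model=model, prompt=prompt)["response"]
#
#   print(ask("what is a qubit?", "quick", ollama_generate))
```

Switching models then means editing one dictionary entry rather than every call site.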

Why Use Ollama?

Running powerful LLMs locally used to be a complex and costly process, but Ollama changed the game. Here’s why it stands out:

No expensive hardware: You don't need high-end GPUs to run Llama or other LLMs, and smaller models can run on an ordinary laptop.

Privacy-first: Since Ollama operates offline, your data never leaves your machine, giving you complete control.

Multimodal capabilities: With models like LLaVA, you can interact with both text and images.

Conclusion

With Ollama, running a powerful LLM locally is no longer a pipe dream reserved for those with deep pockets or high technical expertise. Whether you’re working on sensitive data or just want the convenience of offline AI, Ollama provides an accessible and efficient solution for LLMs on your machine.

If you enjoyed this blog and want to stay updated on more AI tools and techniques, feel free to comment, follow, and share with your fellow AI enthusiasts!
