How to reduce LLM Hallucinations?

Large language models (LLMs) are making their way into various industries thanks to their content-generation capabilities: they can answer complex queries, generate images from text, create music, and much more. But did you know that around 86% of internet users have personally experienced AI hallucinations? (Tidio Findings on AI Hallucinations)

What is hallucination in AI?

Alongside their great achievements, LLMs have some drawbacks. One of them is producing false information, which is called hallucination.

A hallucination occurs when an LLM provides wrong information, generates nonsensical text or images, or gives otherwise erroneous results.

If organizations don't mitigate these errors, a model can keep producing offensive text and spreading misinformation with utmost confidence.

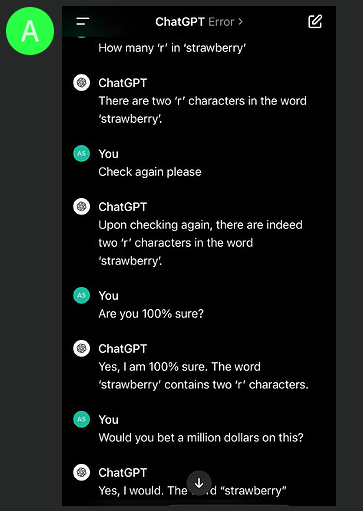

For example, a few months back, users asked OpenAI's generative AI chatbot ChatGPT, "How many r's are there in Strawberry?" ChatGPT replied, "There are two r's in Strawberry".

The word actually contains three r's, but ChatGPT couldn't give the right answer. This is a case of hallucination.

If you ask ChatGPT the same question now, it gives the correct answer.
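
The count in this example is easy to verify deterministically. As a minimal illustration in Python (the variable names are our own and are not part of the original exchange), a plain string operation settles the question:

```python
word = "Strawberry"

# str.count is case-sensitive, so lowercase the word before counting.
r_count = word.lower().count("r")

print(r_count)  # 3
```

A check like this is the kind of task a model can hand off to an external tool instead of answering from memory.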

How can we reduce hallucinations?

The most crucial step in ensuring that an AI model doesn't "make up" answers is to train it on high-quality, diverse data. The next steps are data cleaning and filtering, followed by fine-tuning the model under human supervision, known as Reinforcement Learning from Human Feedback (RLHF). This means real people give feedback to help the model learn and correct itself over time.
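
To make the cleaning and filtering step more concrete, here is a minimal Python sketch. The function name `clean_corpus`, the `min_words` threshold, and the sample records are assumptions made for illustration; real pipelines typically add near-duplicate detection, language filtering, and quality scoring on top of this.

```python
import re

def clean_corpus(records, min_words=3):
    """Normalize whitespace, drop very short records, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for text in records:
        # Collapse runs of whitespace into single spaces.
        normalized = re.sub(r"\s+", " ", text).strip()
        if len(normalized.split()) < min_words:
            continue  # too short to be a useful training example
        key = normalized.lower()
        if key in seen:
            continue  # case-insensitive exact duplicate
        seen.add(key)
        cleaned.append(normalized)
    return cleaned

# Hypothetical raw records: one duplicate with messy spacing, one too short.
raw = [
    "Paris is the capital of France.",
    "paris is the   capital of France.",
    "ok",
    "Water boils at 100 degrees Celsius at sea level.",
]

print(clean_corpus(raw))
```

Only the first and last records survive: the second is a duplicate of the first once case and spacing are normalized, and the third is too short.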

There are also some advanced techniques such as:

1. Retrieval-Augmented Generation (RAG): RAG supports the LLM with domain-specific, up-to-date knowledge to increase the accuracy of its responses. It is a powerful technique that combines prompt engineering with context retrieval from external data sources to improve the performance and relevance of LLMs.

2. DataGemma and Retrieval Interleaved Generation (RIG): DataGemma is a set of models developed by Google that are grounded in Data Commons, a vast repository of publicly available, trustworthy data. With RIG, the model checks its figures against Data Commons during the generation process, whereas RAG retrieves supporting context before generation begins. DataGemma uses both approaches to produce more grounded LLMs.

3. Toolformer: Developed by Meta, Toolformer is a language model that calls external tools through APIs, such as search engines, to keep its responses grounded in facts. This lets chatbots return up-to-date information and reduces the likelihood of made-up answers.

4. Contrastive Learning: For multimodal large language models, contrastive learning is being researched as a way to reduce hallucination. It is a training method that helps the AI learn to differentiate between correct and incorrect information by showing it pairs of examples. By comparing and contrasting these examples, the model becomes better at recognizing the right answer and avoiding hallucinations.

5. Applying Prompt Engineering Techniques: Crafting effective prompts is a powerful way to guide LLMs. By carefully structuring prompts and incorporating task-specific instructions, we can significantly reduce the likelihood of hallucinations. Useful techniques include providing specific examples, stating the desired tone or style, and using keywords that steer the model toward the intended answer.
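
The retrieval and prompt-engineering ideas in the list above can be combined in a small Python sketch. This is a toy illustration under stated assumptions, not a production RAG system: the helper names `tokenize`, `retrieve`, and `build_grounded_prompt` are hypothetical, retrieval is plain word overlap instead of embedding search, and no model is actually called. The sketch only shows how retrieved context and an explicit instruction end up in the prompt.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Return up to k documents that share the most words with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Keep only documents with some overlap, highest score first.
    ranked = sorted(
        (item for item in scored if item[0] > 0),
        key=lambda item: item[0],
        reverse=True,
    )
    return [doc for _, doc in ranked[:k]]

def build_grounded_prompt(query, passages):
    """Build a prompt that tells the model to answer only from the passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

# Hypothetical knowledge base, using facts stated in this article.
documents = [
    "Data Commons is a repository of publicly available data.",
    "Toolformer was developed by Meta.",
    "RLHF uses feedback from real people to fine-tune a model.",
]

question = "Who developed Toolformer?"
prompt = build_grounded_prompt(question, retrieve(question, documents, k=1))
print(prompt)
```

The explicit "I don't know" instruction gives the model a permitted way out when the retrieved context is insufficient, which is one simple way prompts can discourage made-up answers.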

Conclusion

Large Language Models are powerful tools with immense capabilities, but they still face the challenge of hallucination. By using high-quality data, human feedback, and advanced techniques like RAG, DataGemma, and Toolformer, developers are finding new ways to reduce mistakes and improve the accuracy of AI models. As these techniques continue to evolve, we can expect AI to become more reliable, trustworthy, and useful in solving complex problems across industries.
